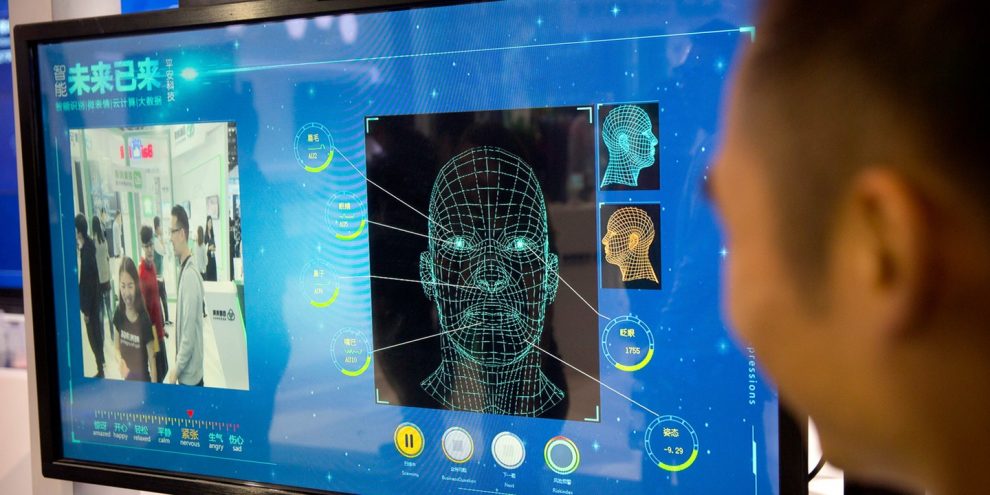

American psychologist Paul Ekman’s research on facial expressions spawned a whole new profession of human lie detectors more than four decades ago. Artificial intelligence could soon take their jobs.

While the U.S. has pioneered the use of automated technologies to reveal the hidden emotions and reactions of suspects, the technique is still nascent, and a flock of entrepreneurial ventures is working to make it more efficient and less prone to false signals.

Facesoft, a U.K. start-up, says it has built a database of 300 million images of faces, some of which were created by an AI system modeled on the human brain, The Times reported. The company’s system can identify emotions such as anger, fear and surprise based on micro-expressions, which are often invisible to the casual observer.

“If someone smiles insincerely, their mouth may smile, but the smile doesn’t reach their eyes — micro-expressions are more subtle than that and quicker,” co-founder and Chief Executive Officer Allan Ponniah, who’s also a plastic and reconstructive surgeon in London, told the newspaper.

Facesoft has approached police in Mumbai about using the system to monitor crowds and detect evolving mob dynamics, Ponniah said. It has also touted its product to police forces in the U.K.

The use of AI algorithms by police has stirred controversy recently. A research group whose members include Facebook Inc., Microsoft Corp., Alphabet Inc., Amazon.com Inc. and Apple Inc. published a report in April stating that current algorithms, which are aimed at helping police determine who should be granted bail, parole or probation and at helping judges make sentencing decisions, are potentially biased and opaque, and may not even work.

The group, the Partnership on AI, found that such systems were already in widespread use in the U.S. and were gaining a foothold in other countries. It said it opposed any use of these systems.

Story cited here.