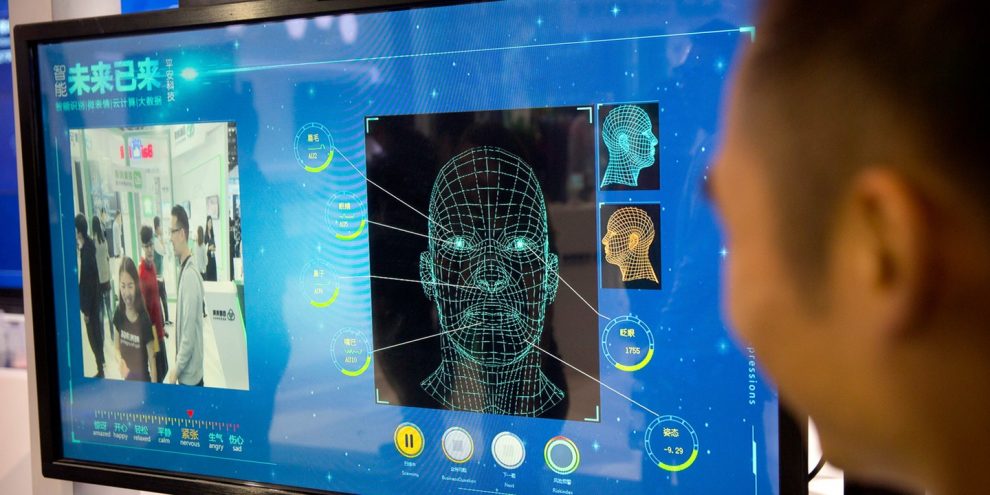

American psychologist Paul Ekman’s research on facial expressions spawned a whole new profession of human lie detectors more than four decades ago. Artificial intelligence could soon take their jobs.

While the U.S. has pioneered the use of automated technologies to reveal the hidden emotions and reactions of suspects, the technique is still nascent, and a flock of entrepreneurial ventures is working to make it more efficient and less prone to false signals.

Facesoft, a U.K. start-up, says it has built a database of 300 million images of faces, some of which have been created by an AI system modeled on the human brain, The Times reported. The company's system can identify emotions like anger, fear and surprise based on micro-expressions, which are often invisible to the casual observer.

“If someone smiles insincerely, their mouth may smile, but the smile doesn’t reach their eyes — micro-expressions are more subtle than that and quicker,” co-founder and Chief Executive Officer Allan Ponniah, who’s also a plastic and reconstructive surgeon in London, told the newspaper.

Facesoft has approached police in Mumbai about using the system to monitor crowds and detect evolving mob dynamics, Ponniah said. It has also touted its product to police forces in the U.K.

The use of AI algorithms among police has stirred controversy recently. A research group whose members include Facebook Inc., Microsoft Corp., Alphabet Inc., Amazon.com Inc. and Apple Inc. published a report in April stating that current algorithms meant to help police determine who should be granted bail, parole or probation, and to help judges make sentencing decisions, are potentially biased, opaque, and may not even work.

The Partnership on AI found that such systems are already in widespread use in the U.S. and are gaining a foothold in other countries too. It said it opposes any use of these systems.

Story cited here.