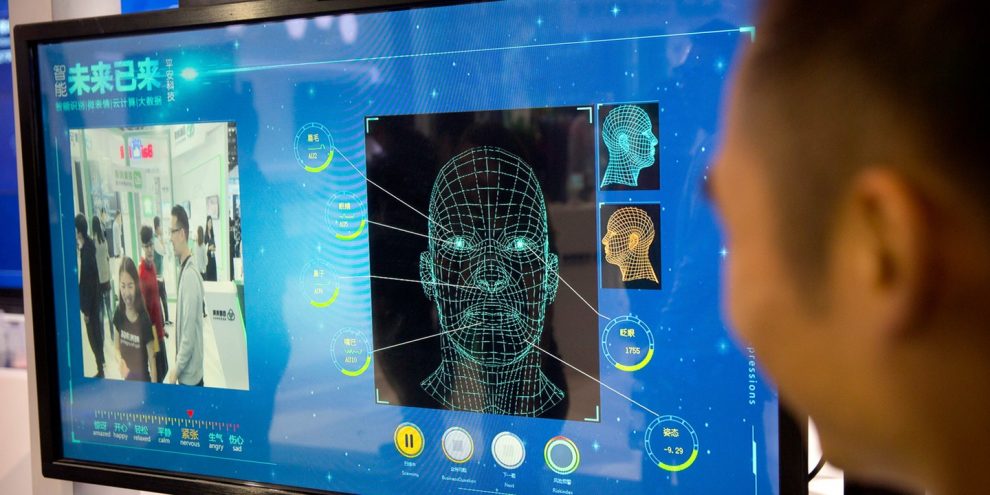

American psychologist Paul Ekman’s research on facial expressions spawned a whole new profession of human lie detectors more than four decades ago. Artificial intelligence could soon take their jobs.

While the U.S. has pioneered the use of automated technologies to reveal the hidden emotions and reactions of suspects, the technique is still nascent, and a whole flock of entrepreneurial ventures is working to make it more efficient and less prone to false signals.

Facesoft, a U.K. start-up, says it has built a database of 300 million images of faces, some of which have been created by an AI system modeled on the human brain, The Times reported. The company’s system can identify emotions such as anger, fear and surprise based on micro-expressions that are often invisible to the casual observer.

“If someone smiles insincerely, their mouth may smile, but the smile doesn’t reach their eyes — micro-expressions are more subtle than that and quicker,” co-founder and Chief Executive Officer Allan Ponniah, who’s also a plastic and reconstructive surgeon in London, told the newspaper.

Facesoft has approached police in Mumbai about using the system to monitor crowds and detect evolving mob dynamics, Ponniah said. It has also pitched its product to police forces in the U.K.

The use of AI algorithms by police has stirred controversy recently. A research group whose members include Facebook Inc., Microsoft Corp., Alphabet Inc., Amazon.com Inc. and Apple Inc. published a report in April stating that current algorithms aimed at helping police determine who should be granted bail, parole or probation, and at helping judges make sentencing decisions, are potentially biased, opaque, and may not even work.

The Partnership on AI found that such systems are already in widespread use in the U.S. and are gaining a foothold in other countries too. It said it opposes any use of these systems.

Story cited here.